Four Don’t Dos That We Already Did With AI

Artificial intelligence has been portrayed in science fiction as a savior or destroyer of humanity, so which is it?

No one expected the age of Artificial Intelligence (AI) to arrive so quickly. Many of the things we had been promised, like self-driving cars and full-service robot valets, always seemed to meet with delays and disappointment.

Then a chatbot called ChatGPT came along, and the world changed overnight. Suddenly, a program could talk to us, understand us, and even answer our questions just like a real person would.

AI could also generate new content, from images to video to music. And it could create new programs and applications, simply by interpreting what we wanted it to make.

The nerd in me thrilled. But dark questions lurked.

In the world of Star Trek, “synthetic” life forms ultimately were banned because of the danger they posed to sentient life. In the Terminator movies, Skynet set out to destroy all of humankind.

Science fiction often has the prescience that hard science alone does not. So the advent of the AI age in our own world had me wondering.

How powerful will AI become? How much will it displace real humans in our economy, potentially causing massive dislocation? And most ominously, can we control it, or will our new AI servants eventually become our masters?

When dealing with any new technology, especially one that can grow and spread at an exponential rate, we should be asking some important questions and setting some important boundaries and safeguards.

But did we miss that chance with AI? And what can we do about it now? This is today’s subject in The Big Picture.

— George Takei

Machine learning researcher and renowned author Max Tegmark, who joined more than 1,000 thought and industry leaders in calling for a pause in developing systems more powerful than GPT-4, the model behind the current version of ChatGPT, recently wrote an op-ed for TIME magazine.

In his piece, Tegmark compared AI to the asteroid in the movie “Don’t Look Up.” He walked through the ways we are dealing (or not dealing) with the threat of AI, which mirror the ways the fictional U.S. government and public in the film deal with a planet-destroying asteroid on a collision course with Earth.

It was a bit tongue-in-cheek, but toward the end Tegmark made an astute observation.

It made my stomach drop.

Tegmark observed that “if you’d summarize the conventional past wisdom on how to avoid an intelligence explosion in a ‘Don’t-do-list’ for powerful AI,” it might start with these four don’ts:

☐ Don’t teach it to code

☐ Don’t connect it to the internet

☐ Don’t give it a public API

☐ Don’t start an arms race

Here’s the bad news.

On each of these don’ts, we’ve already gone ahead and done it.

We Taught It to Code

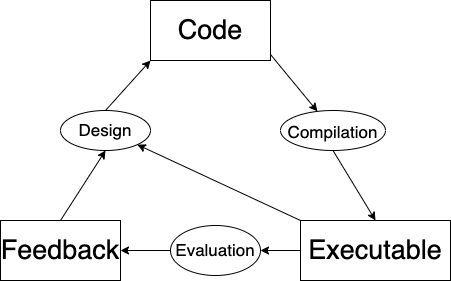

It seemed a natural extension of ChatGPT’s powers. Coding is long, tedious work when humans perform it. It’s prone to error, and those errors can compound.

But ask ChatGPT to write the code, and it can do it quickly.

The code it produces is also often largely error-free, because it has been trained on the logic and syntax of the major programming languages. This frees humans up to do other things, like designing, debugging and testing.

Having a bot do the coding can also act as a great leveler, allowing anyone who has an idea to execute on it simply by using plain-language commands. Soon, you won’t need to learn how to code in order to create amazing computer programs.

The bot will do it for you.

For all the wonders of having a chatbot write the software, there is a risk. Tegmark warns of something called “recursive self-improvement,” which is a fancy way of saying the machine learns how to learn better. With each successful round of coding, it can build upon that lesson and do the job faster and more efficiently the next time.

But that could eventually leave humans unable to understand the underlying code or how it got better. One day, perhaps not long from now, only the machines will understand how they did it, and we will just have to trust that it works.

They will pull far, far ahead.

We Hooked It to the Internet

ChatGPT is built on something called a Large Language Model (LLM), into which oodles of information are fed so that the AI has a whole universe of examples to draw from. But where does it get the information it uses to answer our questions?

ChatGPT plugged into the internet on March 23, 2023. It began to draw on third-party knowledge and databases, including the web. That gave it as much access as, well, anyone on the web might have.

That’s a lot.

OpenAI, the creator of ChatGPT, did that in order to give its product the ability to pull the best answers possible from the web. It still gets things wrong, because the web is full of errors, and it is still learning to sort correct information from incorrect. But it’s getting better.

But again, giving an AI access to all the information collected by humanity and stored on the web means we have granted it enormous power. In the wrong hands, that information could be used against us. And as Tegmark warns, the AI itself could use it to “gain power” over us.

A human with all of the power of the internet behind them would be nearly unstoppable. What makes us think an AI with that power would only use it for good, or that a bad actor couldn’t hijack that AI to do very, very bad things?

We Gave It a Public API

Speaking of bad actors, another thing Tegmark warned about was giving ChatGPT a “public API.” API is short for “Application Programming Interface.” It’s the way third parties can harness the power of ChatGPT for use in their own products.

For example, since ChatGPT’s launch in November 2022, its technology has been added to many popular products and services, including Shopify, Snapchat and Duolingo. That permits great steps forward for those companies, which can use ChatGPT to offer better user experiences and features like personalized recommendations.

Anyone who wanted to incorporate ChatGPT could do so because it had a public API. That’s great for individuals, small companies, and start-ups.

But Tegmark notes that this means nefarious actors can use ChatGPT in their code—and some have already begun to do so. For example, it could be used to “turbocharge fraud and scams,” according to the FTC.

There’s even a really bad version already out there, ominously called ChaosGPT, whose anonymous user supposedly instructed the bot to be a “destructive, power-hungry, manipulative AI.” It immediately searched the web for the most destructive weapons known to humankind.

It’s just one scary example of how readily this technology can be misused.

The Arms Race Has Already Begun

There’s big money and bragging rights behind AI milestones. Google was caught flat-footed by the sudden rise and popularity of ChatGPT, which is backed by Microsoft and presents an existential threat to Google’s search market dominance.

Google responded with its own AI, called Bard.

China is out with its own AI as well, with internet giant Baidu announcing its chatbot “Ernie.”

Yes, there’s an Ernie and Bard.

And while the letter was signed by more than 1,000 scientists and machine learning experts like Tegmark, one name was noticeably absent: Sam Altman, CEO of OpenAI, the company that got the jump on the competition.

Tegmark warns that the arms race prioritizes development speed over safety. As companies race to deploy newer and faster AI, there isn’t time to make sure the products that are emerging won’t have really bad consequences for society at large.

So Are We Doomed? Is It Too Late?

When I was a child and my father wanted me to control my behavior, he would often ask me to assess what I had done wrong and explain how I should adjust. I was the one who knew best what to do with my own self, so he encouraged that self-assessment.

I suppose that’s why I’m a believer in allowing powerful AI to establish its own boundaries, and to do it now, before it starts breaking too many of the rules or gets twisted into some chaos agent by bad actors.

So this morning I asked ChatGPT-4 this question: “How do we stop AI from harming humanity? Give me three top ways.”

I play around with ChatGPT a lot, and based on my experience, it had to think about this question a bit longer than normal. Here’s what it came back with, word for word.

Rigorous Safety Research: Just as with any other powerful technology, the safe use of AI requires extensive research and testing. We need to ensure that AI systems behave as intended, even in extreme or unexpected situations, and that they can’t be easily exploited for harmful purposes.

Transparency and Accountability: It’s crucial that AI systems be transparent and explainable, so we can understand how they’re making decisions. This not only helps prevent harmful outcomes, but also allows us to hold the right people or organizations accountable when things do go wrong.

Legal and Ethical Frameworks: Developing robust legal and ethical frameworks that guide the use of AI is another essential step. These frameworks should include strong regulations and safeguards to prevent misuse of AI, as well as mechanisms for dealing with harm caused by AI. This could involve things like mandatory impact assessments for new AI systems, or requirements for AI developers to carry insurance against potential harm.

Out of curiosity, I asked it to draft model legislation regulating AI in the U.S., and within seconds it began spitting out a high-level draft of The Responsible Artificial Intelligence Act.

It was a striking example of how we can take advantage, today, of the incredible speed and power of ChatGPT while it is in its infancy, to help it grow up into a responsible, non-harmful teenager in a few months.

But the time to act is now.

We already missed the boat on some key chances to keep this potentially dangerous technology in check.

But it’s not too late to keep it from turning into Skynet.